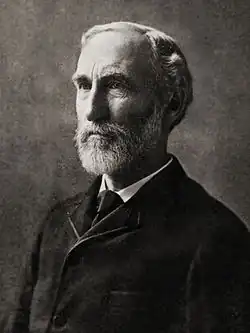

Josiah Willard Gibbs In information theory , Gibbs' inequality is a statement about the information entropy of a discrete probability distribution . Several other bounds on the entropy of probability distributions are derived from Gibbs' inequality, including Fano's inequality .

It was first presented by J. Willard Gibbs in the 19th century.

Suppose that

P

=

{

p

1

,

…

,

p

n

}

{\displaystyle P=\{p_{1},\ldots ,p_{n}\}}

Q

=

{

q

1

,

…

,

q

n

}

{\displaystyle Q=\{q_{1},\ldots ,q_{n}\}}

probability distributions . Then

−

∑

i

=

1

n

p

i

log

p

i

≤

−

∑

i

=

1

n

p

i

log

q

i

{\displaystyle -\sum _{i=1}^{n}p_{i}\log p_{i}\leq -\sum _{i=1}^{n}p_{i}\log q_{i}}

with equality if and only if

p

i

=

q

i

{\displaystyle p_{i}=q_{i}}

i

=

1

,

…

n

{\displaystyle i=1,\dots n}

[ 1] : 68 information entropy of a distribution

P

{\displaystyle P}

cross entropy with any other distribution

Q

{\displaystyle Q}

The difference between the two quantities is the Kullback–Leibler divergence or relative entropy, so the inequality can also be written:[ 2] : 34

D

K

L

(

P

‖

Q

)

≡

∑

i

=

1

n

p

i

log

p

i

q

i

≥

0.

{\displaystyle D_{\mathrm {KL} }(P\|Q)\equiv \sum _{i=1}^{n}p_{i}\log {\frac {p_{i}}{q_{i}}}\geq 0.}

Note that the use of base-2 logarithms is optional, and

allows one to refer to the quantity on each side of the inequality as an

"average surprisal " measured in bits .

Proof

For simplicity, we prove the statement using the natural logarithm, denoted by ln , since

log

b

a

=

ln

a

ln

b

,

{\displaystyle \log _{b}a={\frac {\ln a}{\ln b}},}

so the particular logarithm base b > 11 / ln b .

Let

I

{\displaystyle I}

i

{\displaystyle i}

pi is non-zero. Then, since

ln

x

≤

x

−

1

{\displaystyle \ln x\leq x-1}

x > 0 , with equality if and only if x=1 , we have:

−

∑

i

∈

I

p

i

ln

q

i

p

i

≥

−

∑

i

∈

I

p

i

(

q

i

p

i

−

1

)

{\displaystyle -\sum _{i\in I}p_{i}\ln {\frac {q_{i}}{p_{i}}}\geq -\sum _{i\in I}p_{i}\left({\frac {q_{i}}{p_{i}}}-1\right)}

=

−

∑

i

∈

I

q

i

+

∑

i

∈

I

p

i

=

−

∑

i

∈

I

q

i

+

1

≥

0

{\displaystyle =-\sum _{i\in I}q_{i}+\sum _{i\in I}p_{i}=-\sum _{i\in I}q_{i}+1\geq 0}

The last inequality is a consequence of the pi and qi being part of a probability distribution. Specifically, the sum of all non-zero values is 1. Some non-zero qi , however, may have been excluded since the choice of indices is conditioned upon the pi being non-zero. Therefore, the sum of the qi may be less than 1.

So far, over the index set

I

{\displaystyle I}

−

∑

i

∈

I

p

i

ln

q

i

p

i

≥

0

{\displaystyle -\sum _{i\in I}p_{i}\ln {\frac {q_{i}}{p_{i}}}\geq 0}

or equivalently

−

∑

i

∈

I

p

i

ln

q

i

≥

−

∑

i

∈

I

p

i

ln

p

i

{\displaystyle -\sum _{i\in I}p_{i}\ln q_{i}\geq -\sum _{i\in I}p_{i}\ln p_{i}}

Both sums can be extended to all

i

=

1

,

…

,

n

{\displaystyle i=1,\ldots ,n}

p

i

=

0

{\displaystyle p_{i}=0}

p

ln

p

{\displaystyle p\ln p}

p

{\displaystyle p}

(

−

ln

q

)

{\displaystyle (-\ln q)}

∞

{\displaystyle \infty }

q

{\displaystyle q}

−

∑

i

=

1

n

p

i

ln

q

i

≥

−

∑

i

=

1

n

p

i

ln

p

i

{\displaystyle -\sum _{i=1}^{n}p_{i}\ln q_{i}\geq -\sum _{i=1}^{n}p_{i}\ln p_{i}}

For equality to hold, we require

q

i

p

i

=

1

{\displaystyle {\frac {q_{i}}{p_{i}}}=1}

i

∈

I

{\displaystyle i\in I}

ln

q

i

p

i

=

q

i

p

i

−

1

{\displaystyle \ln {\frac {q_{i}}{p_{i}}}={\frac {q_{i}}{p_{i}}}-1}

and

∑

i

∈

I

q

i

=

1

{\displaystyle \sum _{i\in I}q_{i}=1}

q

i

=

0

{\displaystyle q_{i}=0}

i

∉

I

{\displaystyle i\notin I}

q

i

=

0

{\displaystyle q_{i}=0}

p

i

=

0

{\displaystyle p_{i}=0}

This can happen if and only if

p

i

=

q

i

{\displaystyle p_{i}=q_{i}}

i

=

1

,

…

,

n

{\displaystyle i=1,\ldots ,n}

Alternative proofs

The result can alternatively be proved using Jensen's inequality , the log sum inequality , or the fact that the Kullback-Leibler divergence is a form of Bregman divergence .

Because log is a concave function, we have that:

∑

i

p

i

log

q

i

p

i

≤

log

∑

i

p

i

q

i

p

i

=

log

∑

i

q

i

=

0

{\displaystyle \sum _{i}p_{i}\log {\frac {q_{i}}{p_{i}}}\leq \log \sum _{i}p_{i}{\frac {q_{i}}{p_{i}}}=\log \sum _{i}q_{i}=0}

where the first inequality is due to Jensen's inequality, and

q

{\displaystyle q}

Furthermore, since

log

{\displaystyle \log }

q

1

p

1

=

q

2

p

2

=

⋯

=

q

n

p

n

{\displaystyle {\frac {q_{1}}{p_{1}}}={\frac {q_{2}}{p_{2}}}=\cdots ={\frac {q_{n}}{p_{n}}}}

and

∑

i

q

i

=

1

{\displaystyle \sum _{i}q_{i}=1}

Suppose that this ratio is

σ

{\displaystyle \sigma }

1

=

∑

i

q

i

=

∑

i

σ

p

i

=

σ

{\displaystyle 1=\sum _{i}q_{i}=\sum _{i}\sigma p_{i}=\sigma }

where we use the fact that

p

,

q

{\displaystyle p,q}

p

=

q

{\displaystyle p=q}

Proof by Bregman divergence

Alternatively, it can be proved by noting that

q

−

p

−

p

ln

q

p

≥

0

{\displaystyle q-p-p\ln {\frac {q}{p}}\geq 0}

p

,

q

>

0

{\displaystyle p,q>0}

p

=

q

{\displaystyle p=q}

∑

i

q

i

−

p

i

−

p

i

ln

q

i

p

i

≥

0

{\displaystyle \sum _{i}q_{i}-p_{i}-p_{i}\ln {\frac {q_{i}}{p_{i}}}\geq 0}

p

=

q

{\displaystyle p=q}

This is because the KL divergence is the Bregman divergence generated by the function

t

↦

ln

t

{\displaystyle t\mapsto \ln t}

Corollary

The entropy of

P

{\displaystyle P}

[ 1] : 68

H

(

p

1

,

…

,

p

n

)

≤

log

n

.

{\displaystyle H(p_{1},\ldots ,p_{n})\leq \log n.}

The bound is achieved when

q

i

=

1

/

n

{\displaystyle q_{i}=1/n}

i .

See also

References

^ a b Pierre Bremaud (6 December 2012). An Introduction to Probabilistic Modeling . Springer Science & Business Media. ISBN 978-1-4612-1046-7 . ^ David J. C. MacKay (25 September 2003). Information Theory, Inference and Learning Algorithms . Cambridge University Press. ISBN 978-0-521-64298-9 .